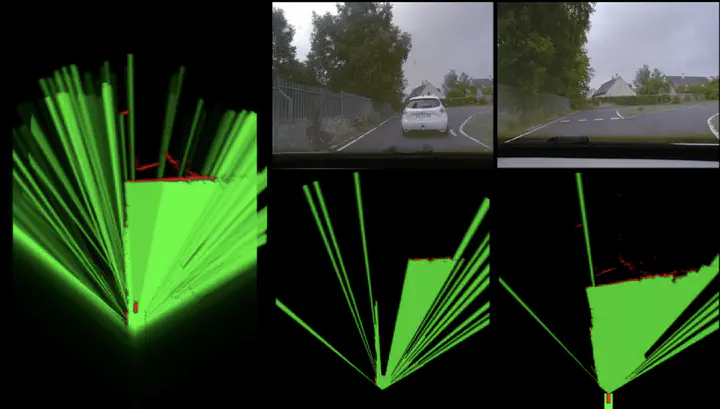

Three columns, from left to right: EgoVehicle’s Mapgrid (fusion), EgoVehicle’s camera and Scangrid, and OtherVehicle’s camera and Scangrid.

In autonomous navigation, perception of the driving scene is an essential element. Existing solutions combine on-board exteroceptive sensors and can interpret part of the dynamic environment in the vehicle’s vicinity. The perception capability of each vehicle can be further enhanced by wireless information sharing, provided that neighboring vehicles transmit pertinent information. The primary benefit of such an approach is to enable cooperative autonomous driving and to improve its performance and safety. Introducing vehicle-to-vehicle communication leads us to view the global architecture as a system of systems. In this work, we address the task of evidential occupancy grid fusion, so that a given vehicle can refine and complete its own occupancy grid with the help of grids received from other nearby vehicles. The communication channel is assumed to be ideal: noiseless and with infinite capacity. We focus on the fusion framework itself, using the theory of belief functions to reason about uncertainties in the relative poses of the vehicles and in the exchanged sensor measurement data. We evaluate the fusion system with real data acquired on public roads with two connected vehicles.
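To make the idea of evidential grid fusion concrete, the following is a minimal sketch, not the authors' implementation. It assumes that each cell carries three masses over the frame {Free, Occupied} (free, occupied, and unknown, i.e. mass on the whole frame), that the two grids are already aligned cell by cell, and that Dempster's rule is used for combination; the abstract does not state which combination rule is applied or how pose uncertainty enters. The function name `dempster_fuse` and the array layout are hypothetical.

```python
import numpy as np

def dempster_fuse(g1, g2, eps=1e-12):
    """Fuse two evidential grids cell by cell with Dempster's rule (assumed rule).

    g1, g2: arrays of shape (..., 3) holding the masses
            [m(Free), m(Occupied), m(Unknown)] of each cell, summing to 1.
    Returns the fused grid and the per-cell conflict mass.
    """
    g1 = np.asarray(g1, dtype=float)
    g2 = np.asarray(g2, dtype=float)
    f1, o1, u1 = g1[..., 0], g1[..., 1], g1[..., 2]
    f2, o2, u2 = g2[..., 0], g2[..., 1], g2[..., 2]

    # Conflict: one source says Free while the other says Occupied.
    conflict = f1 * o2 + o1 * f2
    norm = np.maximum(1.0 - conflict, eps)

    free = (f1 * f2 + f1 * u2 + u1 * f2) / norm
    occ = (o1 * o2 + o1 * u2 + u1 * o2) / norm
    unk = (u1 * u2) / norm
    fused = np.stack([free, occ, unk], axis=-1)

    # Assumption of this sketch: under total conflict Dempster's rule is
    # undefined, so the cell is reset to full ignorance.
    total = np.expand_dims(conflict >= 1.0 - eps, -1)
    fused = np.where(total, np.array([0.0, 0.0, 1.0]), fused)
    return fused, conflict

# Usage: a 1x2 ego grid fused with a 1x2 grid received from another vehicle.
ego = np.array([[[0.6, 0.0, 0.4], [0.0, 0.0, 1.0]]])
other = np.array([[[0.5, 0.0, 0.5], [0.0, 0.7, 0.3]]])
fused, conflict = dempster_fuse(ego, other)
```

In this sketch, a cell that the ego vehicle knows nothing about (all mass on unknown) simply takes the received masses, which is how a received grid can complete the local one; cells observed by both vehicles are reinforced or, when the sources disagree, yield a nonzero conflict value.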